Applications of Virtual Reality to Improve Design Reviews and Code Evaluation in Construction

Fadi Castronovo

Assistant Professor of Engineering

Course Name & Description: CMGT 345 Building Codes and Commissioning - CMGT 345 teaches construction management students the building codes for structural, mechanical, electrical, and plumbing systems. It also introduces students to building safety and accessibility, energy efficiency (Title 24), and commissioning. The course is a 3-unit, semester-long, lecture-based course that has previously met in a regular desk-and-chair classroom.

Project Abstract: Dr. Castronovo implemented a virtual reality educational simulation game. The game aims to support students in developing problem-solving skills related to the architecture, engineering, and construction (AEC) industry while stimulating their motivation and engagement. The game, the Design Review Simulator (DRS), is a virtual reality educational game designed to support construction and architecture students in developing skills in analyzing, reviewing, and evaluating the design and construction of residential projects. In future research, Dr. Castronovo plans to expand the DRS with additional modules that teach steel and concrete structures. Additionally, the DRS was used to teach construction methods as they relate to the international building codes. As part of the course redesign, Dr. Castronovo developed instructional material to support the assessment of the learning objectives. The game is therefore used not only as an engaging experience but also to teach students problem-solving skills. With the instructional material, Dr. Castronovo will assess the students' learning and the value of the software in the classroom.

Keywords/Tags: Construction, Architecture, Design, Review, Virtual Reality

Instructional Delivery: In-Class

Pedagogical Approaches: Flipped, Active/Inquiry-based Learning, VR.

About the LIT Redesign

Stage 1

1. Background on the Redesign

1.1. Why Redesign your Course?

CMGT 345 teaches construction management students the building codes for structural, mechanical, electrical, and plumbing systems. It also introduces students to building safety and accessibility, energy efficiency (Title 24), and commissioning. The course is a 3-unit, semester-long, lecture-based course that has previously met in a regular desk-and-chair classroom. Starting in the Fall of 2019, the course will be taught in the Visualization and Immersion Classroom (VIC), which is suited for the delivery of large-scale educational interventions with virtual reality (VR). The VIC is a computer laboratory equipped with 48 computers, 10 of which are high-end, VR-ready computers capable of running the latest industry-standard software. It also has ten VR headsets for educational experiences in virtual spaces, capable of rendering virtual environments in real time.

Dr. Castronovo in the Visualization and Immersion Classroom (VIC)

While the course does not have a lab component, the purpose of this course redesign is to include innovative technology-based pedagogical techniques. Support from Lab Innovations with Technology (LIT) allowed Dr. Castronovo to redesign the course to include virtual-reality-based activities that enhance the students' learning of evaluation, review, and analysis skills.

1.2. High Demand/Low Success/Facilities Bottleneck Issues

While the CMGT 345 course does not have a high rate of repeatable grades, it needs active learning solutions. The material can be challenging to process and to relate to real-world scenarios. The international building codes are prescriptive study materials with a wide variety of rules and regulations that students need to absorb in a short period. Students also need to develop practical skills that make them competitive in the job market. CMGT 345 therefore needed pedagogical methods that let students practice industry skills. Methods such as field trips and guest lectures, however, leave students as spectators and do not provide the level of interaction needed to gain problem-solving skills. The software solution discussed in the following section is therefore well suited to redesigning the course into an active, engaging learning environment. With the support provided by the Lab Innovations with Technology proposal, Dr. Castronovo will be able to redesign the course to include innovative software and hardware solutions that enhance the learning experience. In particular, Dr. Castronovo has set the following objectives for the proposal: 1) include active learning interventions, 2) develop Design Review Simulator VR assessment material that is aligned to the course material, 3) evaluate the impact of such software in the classroom, and 4) engage undergraduate students in research activities.

Course History / Background

The course is a third-year course that Construction Management students need to take as a prerequisite for several other classes. The course used to be taught by another instructor until the Fall of 2017. Dr. Castronovo was assigned to teach the course in the Fall of 2018. Based on the challenges discussed earlier, Dr. Castronovo decided to start the redesign of the course.

About the Students and Instructor(s)

Stage 2

2. Student Characteristics

The course is a third-year course that Construction Management students need to take as a prerequisite for several other classes. There is only one prerequisite to the course, CMGT 270 Construction Methods. In CMGT 345 the students are exposed for the first time to the codes, rules, and regulations that shape our built environments. Our students are quite different from typical students, as they are required to attend internships on Mondays, Wednesdays, and Fridays. Most of our classes are on Tuesdays and Thursdays and in the evenings. This schedule makes it difficult for students to complete the reading necessary to pass the class, which in turn makes lecture-based teaching less effective because the students do not absorb the material. The students have also reported difficulty connecting the text of the Building Codes to the real world.

3. Advice I Give my Students to be Successful

Dr. Castronovo advises students to come to every class, because the material covered in class is key to meeting the module and course learning objectives and passing the assessments. Dr. Castronovo also stresses that students must read the book, as knowing the Building Codes is an essential part of the assessments.

4. Impact of Student Learning Outcomes/Objectives (SLOs) on Course Redesign

The course has the following student learning outcomes:

- Understand different types of occupancies and buildings

- Identify the most relevant elements of fire safety, means of egress and accessibility, and energy efficiency provisions established by the code.

- Understand the permitting process and effectively interact with building officers.

- Effectively identify code non-compliances and propose code approved solutions.

4.1. Alignment of SLOs With LIT Redesign

The goal of the redesign is to 1) include active learning interventions, 2) develop Design Review Simulator VR assessment material that is aligned to the course material, 3) evaluate the impact of such software in the classroom, and 4) engage undergraduate students in research activities. The redesign of the course will include three main strategies: 1) flipping the course so that students develop lectures to present to the class, 2) flipping the course so that students work in groups to develop study guides as activities during class time, and 3) using the Design Review Simulator (DRS) to evaluate code compliance in residential housing. The use of the DRS is particularly aligned to the course objective "Effectively identify code non-compliances and propose code approved solutions". The active learning goal will be met through the inclusion of study guide activities, student presenters, educational games, and VR experiences.

4.2. Assessments Used to Measure Students' Achievement of SLOs

Dr. Castronovo has assessed the impact of implementing software solutions on students’ problem-solving, analysis, and motivation through quantitative methods. Dr. Castronovo has measured students' motivation by using in-class feedback and end-of-class evaluations. Dr. Castronovo has evaluated the impact of the intervention on the students’ problem-solving skills, which require a variety of higher-order thinking skills as defined in Bloom’s revised taxonomy (Anderson and Krathwohl 2001). Dr. Castronovo has collected in-class activities and assignments to evaluate, in a quantitative manner, the students’ gain of problem-solving skills with the use of a rubric developed for the class. Lastly, Dr. Castronovo has leveraged in-class student evaluations to analyze the effectiveness of the implemented teaching methods. For example, CSUEB student evaluations request students evaluate the course by answering questions such as: “I was able to apply my learning through activities and assignments” or “In my experience, the instructor expressed an interest in students’ learning.”

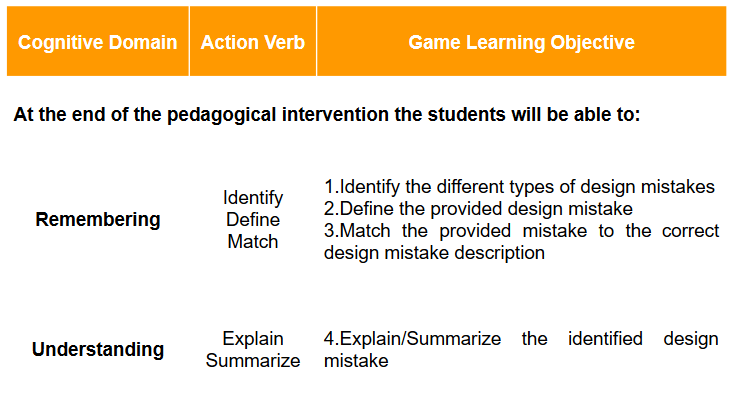

Additionally, Dr. Castronovo has developed an assessment instrument to measure the impact that the DRS VR game has on the students' learning. To assess this learning, Dr. Castronovo has set the following learning objectives for the game:

These learning objectives are particularly aligned to the course objective "Effectively identify code non-compliances and propose code approved solutions".

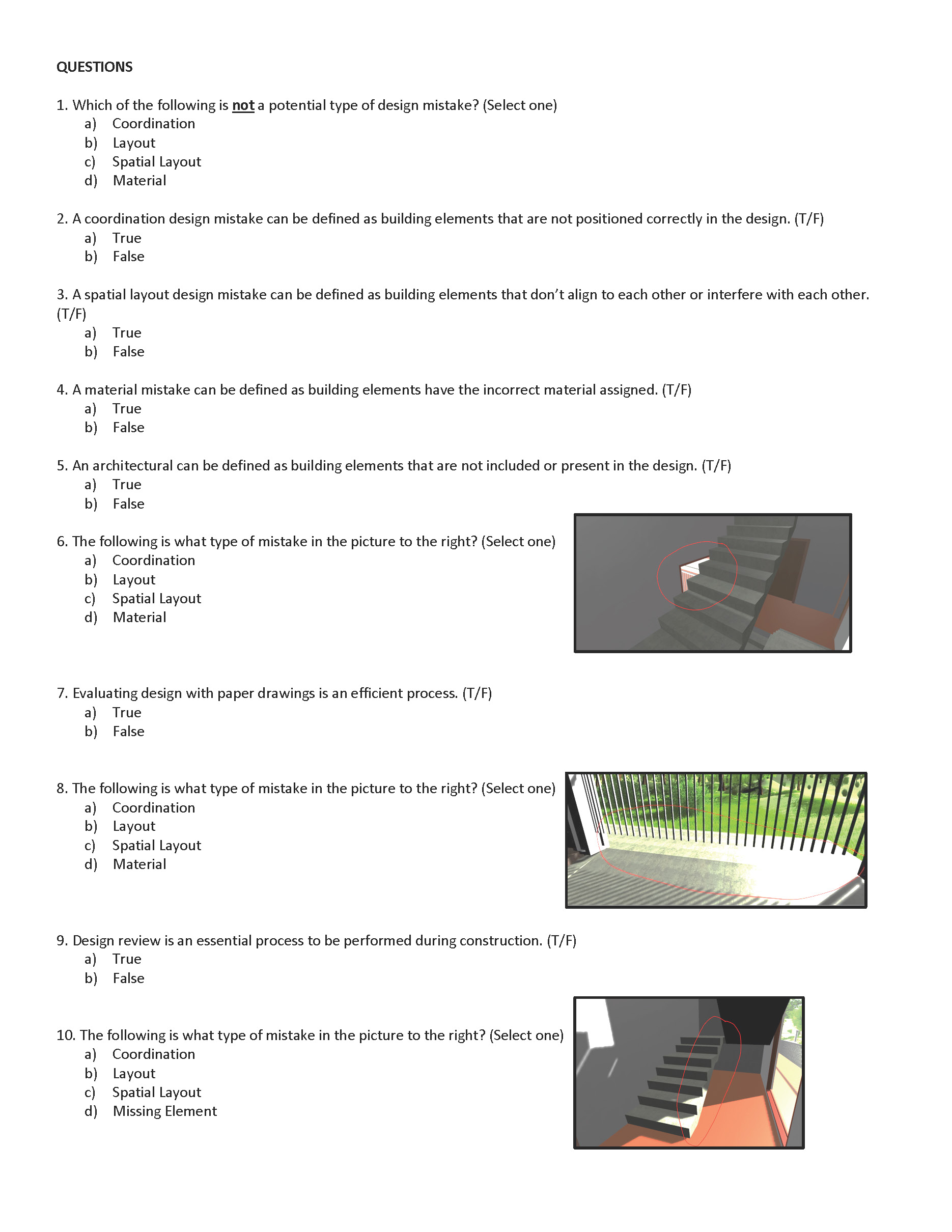

Assessment Quiz at the end of the VR Activity:

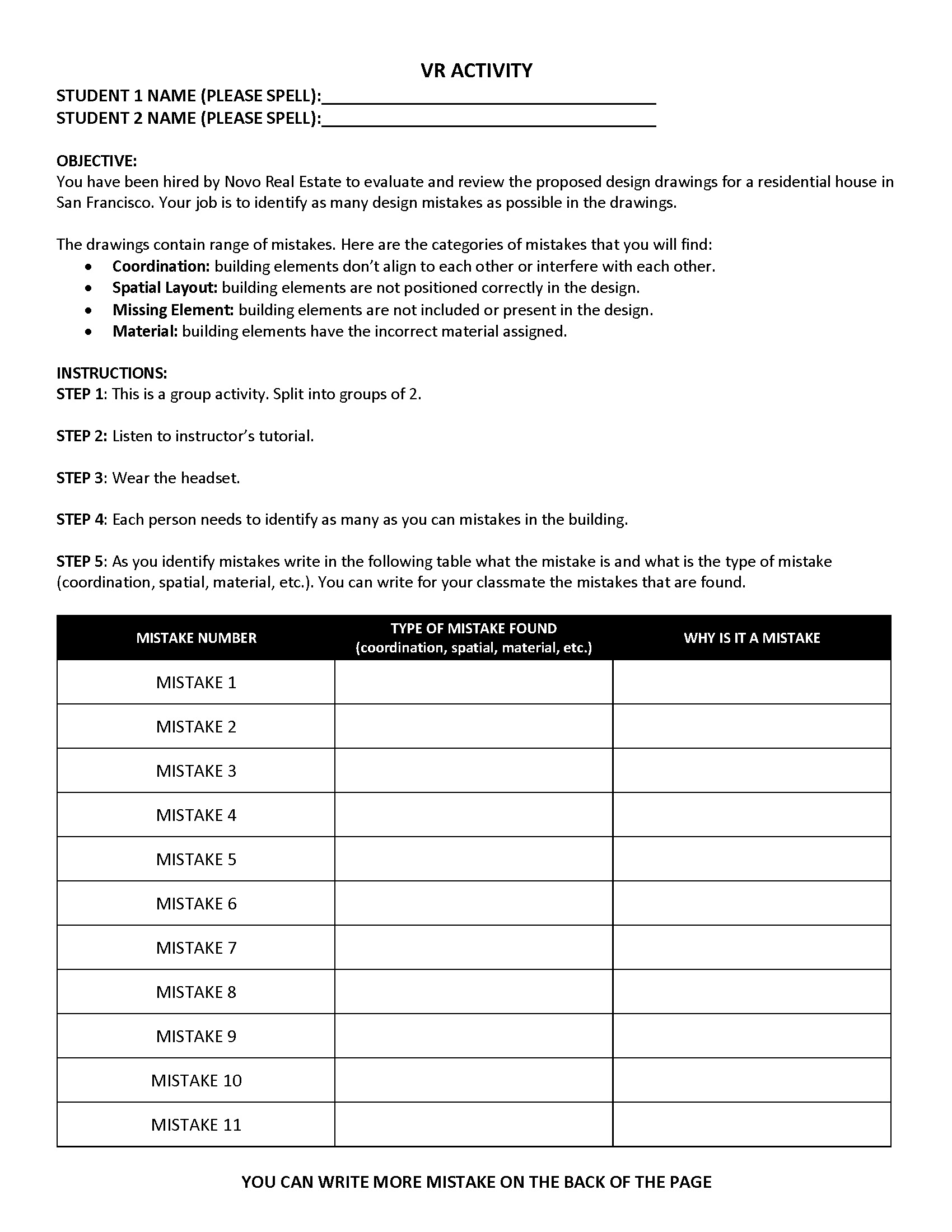

Student handout during the VR activity:

5. Accessibility, Affordability, and Diversity

Accessibility

The use of VR can support students who have mobility disabilities in visualizing built environments that may not be ADA compliant or designed for their needs. Additionally, students who do not have access to this technology at home can use the equipment in our labs at any time outside class time, under faculty or student assistant supervision. Introducing VR into the classroom allows students from underrepresented communities and minorities to directly engage with typically inaccessible technologies and boosts their sense of belonging and self-efficacy.

5.1. Affordability

The course is taught in the Visualization and Immersion Classroom (VIC), which is suited for the delivery of large-scale educational interventions. The VIC is a computer laboratory equipped with 48 computers, 10 of which are high-end, VR-ready computers capable of running the latest industry-standard software. It also has ten virtual reality (VR) headsets for educational experiences in virtual spaces, capable of rendering virtual environments in real time. The VIC is open to the students at any time outside class time. The VR equipment can be used at any time under faculty or student assistant supervision.

5.2. Diversity

Using strategies such as in-class hands-on activities, educational video games, and virtual reality, the redesign will positively disrupt the traditional classroom environment. This will be achieved by applying constructivist and social cognitive learning theories that promote learning-by-doing as a more effective, motivating, and engaging learning experience. Through these active learning opportunities, students will build their knowledge, confidence, and both time and social management skills to continue to succeed. Most importantly, introducing VR into the classroom will allow underrepresented communities and minorities to directly engage with typically inaccessible technologies and to boost their sense of belonging and self-efficacy. Furthermore, leveraging students from diverse backgrounds will enhance the functionalities of the VR environment to be more responsive to different potential interpretations and reactions to the design and problem presented.

6. About the Instructor

Hi! My name is Fadi Castronovo, and I am an Assistant Professor of Engineering at Cal State East Bay. I received my doctorate in Architectural Engineering at Penn State with a minor in Educational Psychology. I am strongly focused on my teaching and research. In my teaching, I strive to provide an engaging and active learning experience to my students by applying innovative technology and researched pedagogical interventions. I translate this passion for pedagogy into my research by evaluating the intersection of innovative technology and learning.

My research-informed teaching applies theories of active experience-based learning using innovative approaches. These approaches aim to reinforce students’ skills such as problem-solving and active learning. Using strategies such as in-class hands-on activities, multimedia tools, and educational video games, I apply constructivist and social cognitive learning theories. These theories promote learning-by-doing as a more effective, motivating, and engaging learning experience. Through these active and interactive teaching opportunities, students build their knowledge, confidence, and time and social management skills to continue to succeed in the competitive building industry. My approach to teaching students in construction disciplines directly responds to the AEC industry demand for our future workforce to have not only sufficient domain knowledge and technical skills but also strong interpersonal skills. In my teaching and mentoring, I prepare students for the ever-changing and demanding construction industry by challenging them to solve problems collaboratively, using the latest tools and technology, while meeting environmental and quality demands.

Curriculum Vitae

Fadi Castronovo - CV

LIT Redesign Planning

Stage 3

7. Implementing the Redesigned Course

What aspects of your course have you redesigned? Describe the class size(s). What technology is being used?

The Fall 2019 CMGT 345 class had a total of 34 students. The goal of the redesign is to 1) include active learning interventions, 2) develop Design Review Simulator VR assessment material that is aligned to the course material, 3) evaluate the impact of such software in the classroom, and 4) engage undergraduate students in research activities. The redesign of the course will include three main strategies: 1) flipping the course so that students develop lectures to present to the class, 2) flipping the course so that students work in groups to develop study guides as activities during class time, and 3) using the Design Review Simulator (DRS) to evaluate code compliance in residential housing. The use of the DRS is particularly aligned to the course objective "Effectively identify code non-compliances and propose code approved solutions". The active learning goal will be met through the inclusion of study guide activities, student presenters, educational games, and VR experiences.

7.1. Redesign Goal 1: Flipping the course where the students have to develop lectures to present to the class

Student groups were assigned the following task: "The objective of this assignment is to gain knowledge and experience of the different ways to apply and evaluate buildings codes. You will be paired up in groups to develop a presentation on a chapter of the international building codes. Each student will be assigned a chapter of the international building codes (see list below). You are responsible for creating a short (20 minute) PowerPoint presentation about the assigned chapter of the international building codes that includes a description summarizing the introduction section and say what is the purpose of the chapter, and a real world example of the chapter. While the description of the book should be used as a starting point for the presentation, students should expand upon what is presented on the book through use of images, illustrations, and examples of the code. For instance, an example could include a description of the process for implementing of the code. The presentation will be evaluated on the student’s ability to convey the description of the code and the quality of the images, illustrations, and examples. The presentation file PPTX will be due right before the start of class in the BlackBoard submission location."

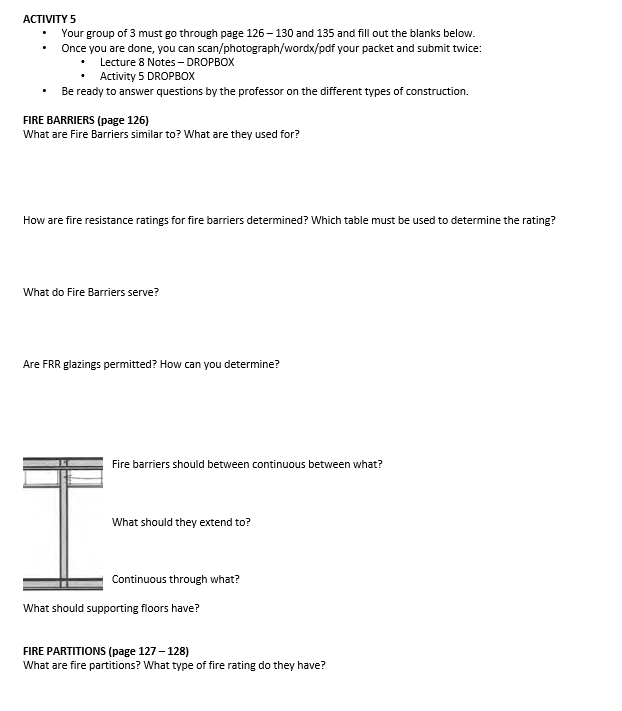

7.2. Redesign Goal 2: Flipping the course where the students have to work as groups to develop study guides as activities during class time

In addition to presenting, the students were challenged with a series of activities in which they had to engage with the textbook to learn the building codes. Dr. Castronovo developed in-class handouts for every chapter as a supplement to the students' lectures. These handouts were mandatory and had to be submitted to receive credit for the class activity. Additionally, the students had to find examples of the code sections in the building where the class was hosted.

7.3. Redesign Goal 3: Use the Design Review Simulator (DRS) to evaluate code compliance in residential housing

For the VR intervention, Dr. Castronovo developed the Design Review Simulator (DRS). The main learning objective of the game is for students to learn to identify and evaluate the types of design-related and construction-related mistakes that can be found in the BIM model of a San Francisco-based townhouse. During gameplay, the students wear an immersive VR headset and are asked to walk around the townhouse and identify design mistakes pertaining to layout, material use, and coordination issues, such as missing components. Bloom’s Taxonomy informed the development of the learning objectives, with a specific focus on the cognitive domain. Within this domain, ‘remembering’ and ‘understanding’ were selected as the primary levels of cognitive thinking skills. Each of these levels was operationalized through action verbs, such as define, explain, match, and identify, aligned with a cognitive level set by the taxonomy. The four resulting learning objectives for the DRS thus focus on the students’ ability to identify, describe, match, and explain the types of design mistakes after completing the game. The game presents the students with four types of design mistakes, each with a specific point value based on the severity of the type of mistake: (1) spatial layout, (2) coordination issues, (3) missing elements, and (4) improper material choices. Once in the game, the learners are first placed outside the townhouse and asked to look around and explore both inside and outside the building for any of the above types of issues. To identify and flag a design mistake, the students point the crosshair at it and press “enter” on the keyboard. If the mistake is correctly identified, the game displays a message with the type and description of the mistake.
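The flag-and-score loop described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the simulator's actual code: the point values, class names, and object identifiers are hypothetical, since the source states only that points depend on the severity of the mistake type.

```python
from dataclasses import dataclass, field

# Hypothetical point values; the source says points follow severity
# but does not list the actual numbers.
POINTS = {
    "spatial layout": 1,
    "coordination issue": 2,
    "missing element": 3,
    "improper material choice": 4,
}


@dataclass(frozen=True)
class Mistake:
    mistake_id: int
    kind: str          # one of the four keys in POINTS
    description: str


@dataclass
class ReviewSession:
    planted: dict                              # object id -> Mistake in the model
    found: set = field(default_factory=set)    # ids of mistakes already flagged
    score: int = 0

    def flag(self, object_id):
        """Handle the player pressing Enter while aiming at `object_id`."""
        mistake = self.planted.get(object_id)
        if mistake is None or mistake.mistake_id in self.found:
            return None  # not a planted mistake, or already flagged
        self.found.add(mistake.mistake_id)
        self.score += POINTS[mistake.kind]
        # Mirrors the in-game message: mistake type plus description.
        return f"{mistake.kind}: {mistake.description}"
```

In this sketch a wrong or repeated flag simply returns nothing; whether the real game penalizes incorrect flags is not stated in the source.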

Video - Example of Dr. Castronovo's Teaching

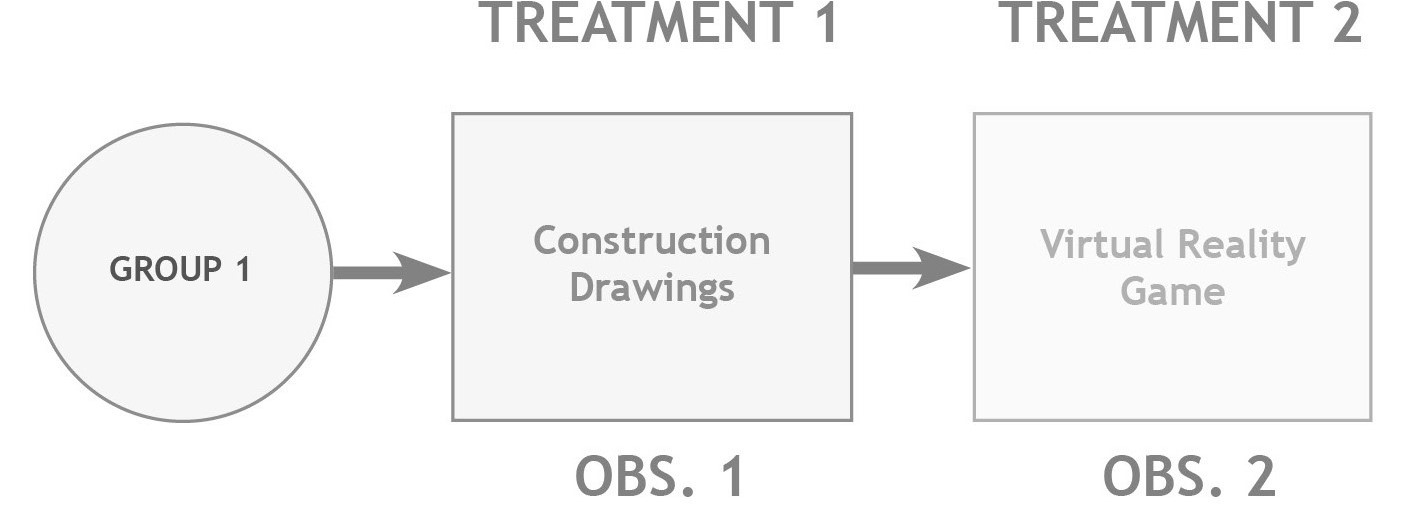

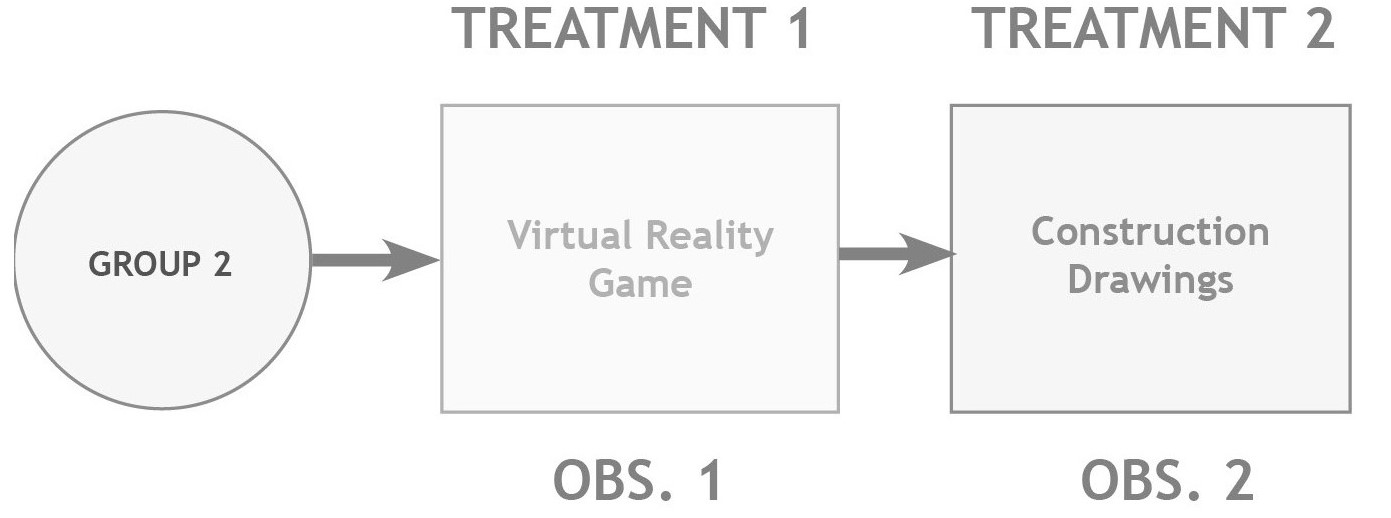

A mixed-design crossover repeated-measures experiment was set up to determine the gains in problem-solving skills that construction students obtained from playing the DRS. This design was chosen to control for the potential sequencing effects of the independent variable (i.e. the learning medium) and for treatment contamination. It allows testing of both between-subject and within-subject differences (see figure below), as well as a comparison of the two groups at the time of the first treatment to test the groups’ equivalence. Based on this design, recruited students were randomly assigned to two groups. The groups were then exposed to the independent variable, type of learning medium, with two levels of treatment: VR game versus paper-based construction drawings. The two treatments were administered on two consecutive days, at the same time for each group, though their sequence differed between the two groups. The first group used the construction drawings on the first day and the VR game on the second day, while for the second group the sequence was reversed. The variation in sequence between the drawings and the VR game tests the possibility that student learning is affected by the order.
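As a rough illustration of the random assignment step, the sketch below shuffles a roster and gives each half one of the two treatment sequences. The function name, the condition labels, and the even split are assumptions made for the example; the source does not describe how the randomization was carried out.

```python
import random


def assign_crossover(student_ids, seed=None):
    """Randomly split students into two groups with reversed treatment order.

    Returns a dict mapping each student id to a (day 1, day 2) tuple.
    """
    rng = random.Random(seed)      # a seed makes the assignment reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2

    schedule = {}
    for sid in shuffled[:half]:
        schedule[sid] = ("drawings", "vr_game")   # Group 1: drawings first
    for sid in shuffled[half:]:
        schedule[sid] = ("vr_game", "drawings")   # Group 2: VR game first
    return schedule
```

Every student receives both treatments, so each student serves as their own control, while the two sequences make it possible to test for an order effect.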

Figure - Experimental Design and Procedure

During the experiment, the students were grouped in pairs and asked to perform design reviews on the provided learning medium and document the types of mistakes on a handout that was the same for both experimental groups. On the handout, the student teams were asked to record the mistakes they encountered (whether in VR or on the drawings): the mistake number, the type of mistake, and an explanation of why they chose that mistake type. The number of mistakes found by the students was used as a dependent variable of the experiment. At the end of each treatment, the students were given an assessment instrument consisting of ten multiple-choice or true-false questions (see the assessment quiz in Section 4.2) to test their achievement of the game’s learning objectives. The number of mistakes and the assessment score were each compared in a 2x2 mixed model ANOVA (observation: day 1, day 2) X (condition: drawings, VR game) with time as the repeated measure and condition as the between-subjects factor.
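For readers unfamiliar with the 2x2 mixed ANOVA, the sketch below computes its three F statistics from scratch for one two-level repeated factor and one two-group between-subjects factor. It assumes unweighted (Type III-style) means and hypothetical input data; the function name and data layout are illustrative only, and the study itself ran the analysis in IBM SPSS Statistics.

```python
from statistics import mean, variance


def mixed_anova_2x2(group1, group2):
    """F statistics for a 2x2 mixed design.

    group1, group2: lists of (score_level_a, score_level_b), one pair
    per participant. Every effect has 1 numerator degree of freedom.
    """
    n1, n2 = len(group1), len(group2)
    df_error = n1 + n2 - 2
    inv_n = 1 / n1 + 1 / n2

    def pooled_var(x1, x2):
        return ((n1 - 1) * variance(x1) + (n2 - 1) * variance(x2)) / df_error

    # Difference scores carry the within-subjects information.
    d1 = [b - a for a, b in group1]
    d2 = [b - a for a, b in group2]
    # Per-participant means carry the between-subjects information.
    m1 = [(a + b) / 2 for a, b in group1]
    m2 = [(a + b) / 2 for a, b in group2]

    var_d = pooled_var(d1, d2)
    var_m = pooled_var(m1, m2)

    return {
        # Is the average change across both groups different from zero?
        "within": ((mean(d1) + mean(d2)) / 2) ** 2 / (var_d * inv_n / 4),
        # Do the groups differ in overall level?
        "between": (mean(m1) - mean(m2)) ** 2 / (var_m * inv_n),
        # Does the change differ between the groups?
        "interaction": (mean(d1) - mean(d2)) ** 2 / (var_d * inv_n),
        "df": (1, df_error),
    }
```

With only two levels per factor, the between-subjects and interaction F values equal the squared independent-samples t statistics on the per-participant means and difference scores, which is why the computation reduces to pooled variances.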

7.4. What professional development activities have you participated in during your course redesign?

During the course redesign Dr. Castronovo attended the National Science Foundation Faculty Development Symposium 2019 at the Society of Hispanic Professional Engineers Annual Convention.

7.5. Which Additional Resources Were Needed for the Redesign?

Dr. Castronovo collaborated with Assistant Professor Patrick Brittle from Chico State University to collect a large sample size of data points. Prof. Brittle implemented the Design Review Simulator in his course which had an aligned learning objective. Through this collaboration the team was able to collect over 120 data points.

LIT Results and Findings

Stage 4

8. LIT Redesign Impact on Teaching and Learning

8.1. Assessment Findings

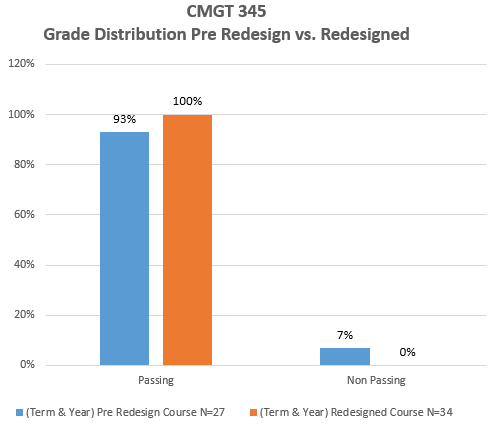

8.1.1. Grade Distribution

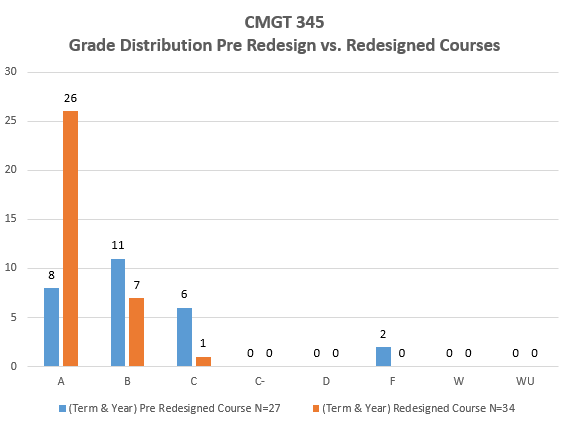

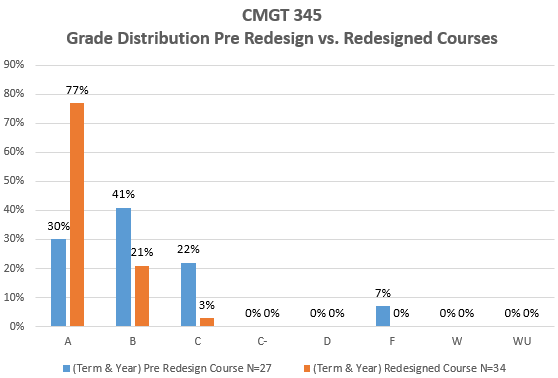

Based on the grade distribution from the CMGT 345 course redesign, 77% of the class received an A, 21% received a B, and 3% received a C. By comparison, before the redesign 30% of the class received an A, 41% received a B, 22% received a C, and 7% did not receive a passing grade. The grade distribution is a testament to the impact of the course redesign strategies.

8.1.2. Redesign Goal 1: Flipping the course where the students have to develop lectures to present to the class

Dr. Castronovo administered a mid-term student evaluation to gauge the students’ perception of the flipped-course strategy: its value, its engagement, and the value of the student presentations. Based on a one-sample T-Test (33 out of 34 students completed the evaluation), Dr. Castronovo found that the students’ rating of the value of student-led presentations was statistically significantly higher than 3 - Neutral (t (32) = 7.23, p = 0.01 < 0.05 at the 95% confidence level). The question was based on a Likert scale:

- How effective do you find the use of the weekly presentation assignment?

- Very effective

- Effective

- Neutral

- Not very effective

- Not at all effective
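The one-sample test against the neutral midpoint can be sketched as follows. The numeric coding below is an assumption (only "3 - Neutral" is given in the text), and the function is illustrative rather than the procedure actually run for the evaluation.

```python
import math
from statistics import mean, stdev

# Assumed numeric coding of the response options; the text only
# confirms that Neutral corresponds to 3.
LIKERT = {
    "Very effective": 5,
    "Effective": 4,
    "Neutral": 3,
    "Not very effective": 2,
    "Not at all effective": 1,
}


def one_sample_t(scores, mu0=3.0):
    """Return (t, degrees of freedom) for H0: the mean score equals mu0."""
    n = len(scores)
    standard_error = stdev(scores) / math.sqrt(n)
    t = (mean(scores) - mu0) / standard_error
    return t, n - 1


# Hypothetical responses, for illustration only.
responses = ["Very effective", "Effective", "Effective", "Neutral"]
t_stat, df = one_sample_t([LIKERT[r] for r in responses])
```

With 33 respondents the test has 32 degrees of freedom, matching the t (32) reported above. A p-value can then be read from a t table or obtained from a statistics package such as `scipy.stats.ttest_1samp`.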

8.1.3. Redesign Goal 2: Flipping the course where the students have to work as groups to develop study guides as activities during class time

Dr. Castronovo administered a mid-term student evaluation to gauge the students’ perception of the flipped-course strategy of using in-class assignments. Based on a one-sample T-Test (33 out of 34 students completed the evaluation), Dr. Castronovo found that the students’ rating was statistically significantly higher than 3 - Neutral for the value of the flipped-course activities and for engagement due to the flipped nature of the course (t (32) = 6.55, p = 0.01 < 0.05 at the 95% confidence level). The question was based on a Likert scale:

- How effective do you find the in-class activities assignments?

- Very effective

- Effective

- Neutral

- Not very effective

- Not at all effective

8.1.4. Redesign Goal 3: Use the Design Review Simulator (DRS) to evaluate code compliance in residential housing

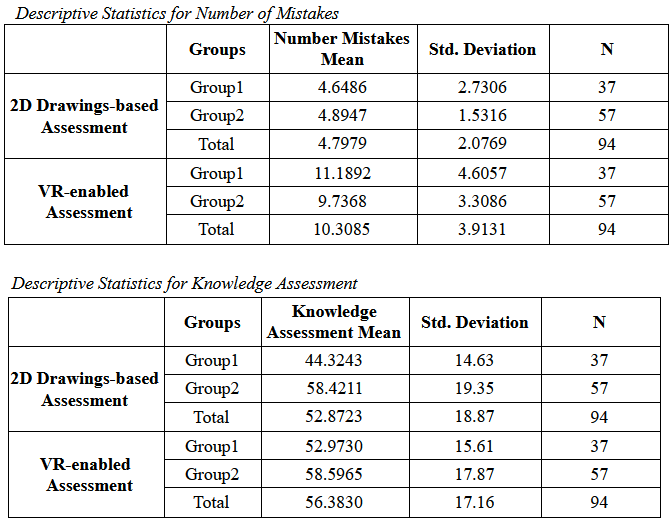

The results from the experiment can be found in the following tables. The tables list the two levels of the independent variable, the learning medium used to perform design reviews, as a within-subjects factor (2D drawings, VR game); the two dependent variables (knowledge assessment, number of mistakes); and order as the between-subjects factor (Group 1, Group 2). For each of these variables, the means and standard deviations are reported. The data were analyzed using the statistical software package IBM SPSS Statistics. To test the hypotheses, several analyses were conducted: two two-way mixed ANOVAs, followed by independent-samples T-Tests and two paired-sample T-Tests. The two two-way mixed ANOVAs addressed the first and second hypotheses, that is, whether the order of implementing the learning medium resulted in any difference in the dependent variables. To investigate the group differences further, independent-samples T-Tests were performed to evaluate the difference between the groups’ knowledge assessment scores. Two paired-sample T-Tests were carried out to test the third and fourth hypotheses, that is, whether the experiment resulted in higher knowledge or a higher number of design mistakes identified.

8.1.4.1. IMPLEMENTATION ORDER ANALYSIS

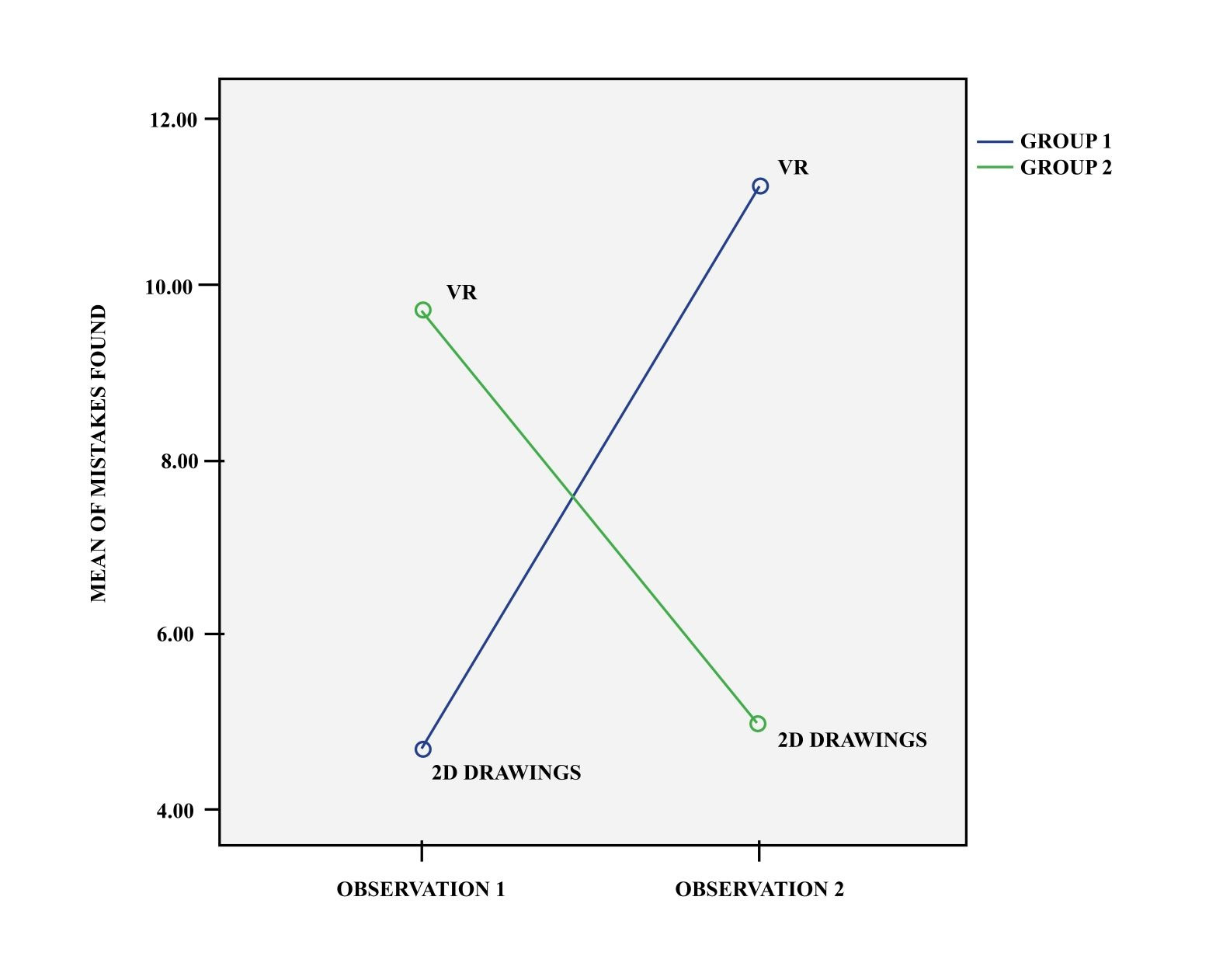

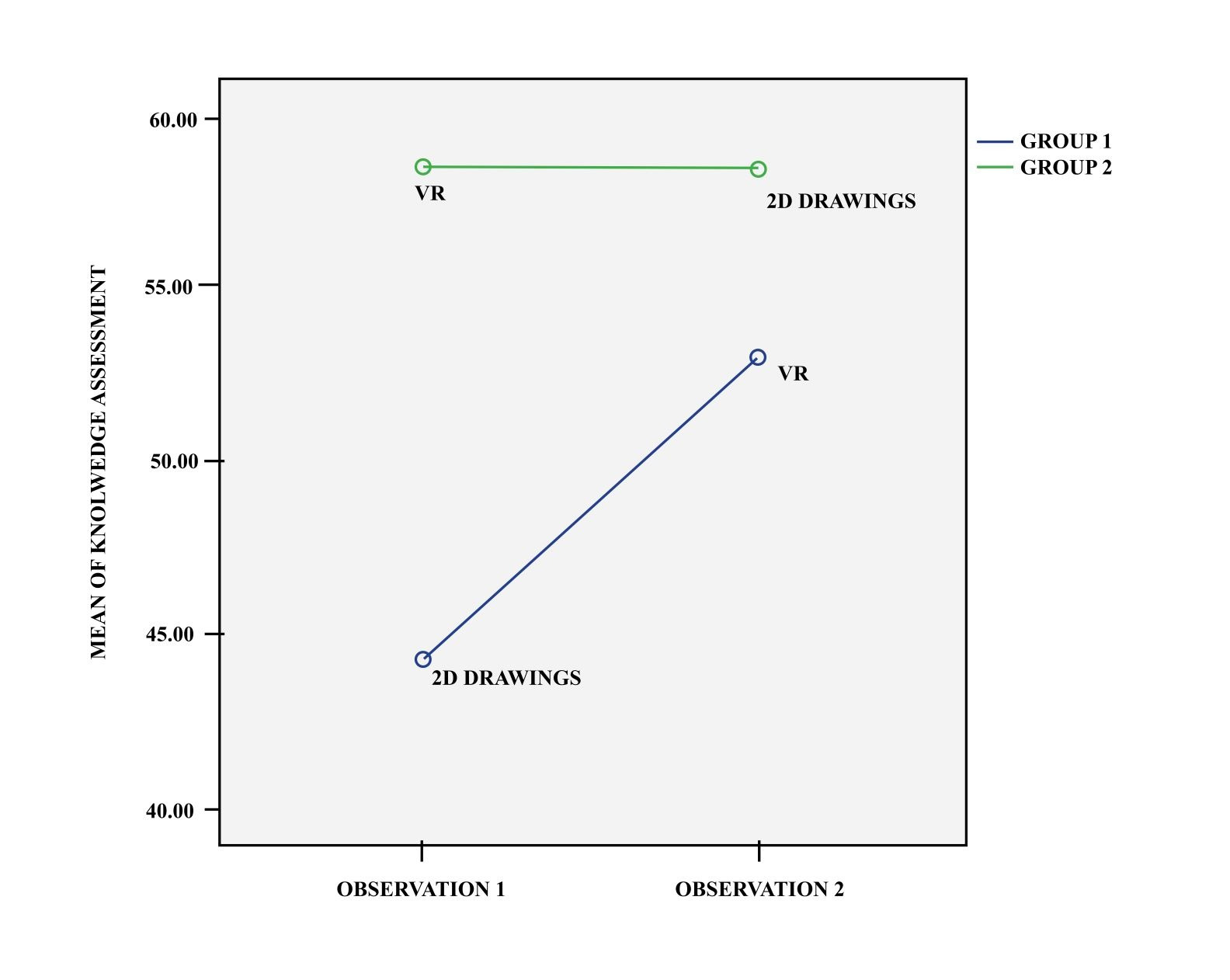

Dr. Castronovo aimed to test hypotheses 1 and 2, which stated: “the order with which the representations modes (i.e. VR and 2D drawings) are implemented will not have an impact on the students’ ability to identify mistakes” and “the order with which the representations modes (i.e. VR and drawings) are implemented will not have an impact on the students’ knowledge of the design review process”. To test these hypotheses, a Two-Way Mixed ANOVA (2x2) analysis was conducted for each dependent variable. Each analysis used the independent variable as a within-subjects factor (paper-based 2D drawings and DRS VR game) and order as a between-subjects factor (Group 1: (1) drawings, (2) VR game; and Group 2: (1) VR game, (2) drawings). The interpretation of these analyses is facilitated by graphing the four mean values. These are depicted in the following figures, Mean of Number of Mistakes Results and Mean Knowledge Assessment Scores Results.

Figure - Mean of Number of Mistakes Results

The results of the Two-Way Mixed ANOVA analysis for the number of mistakes found by the students showed a significant difference in the within-subject factor (F(1, 92) = 4.776, p = 0.049 < 0.05, partial η² = 0.014), but no significant difference in the between-subjects factor (F(1, 92) = 1.302, p = 0.257 > 0.05, partial η² = 0.014). On the other hand, the results of the Two-Way Mixed ANOVA analysis for the knowledge assessment showed a significant difference in both the within-subject factor (F(1, 92) = 4.79, p = 0.031 < 0.05, partial η² = 0.5) and the between-subjects factor (F(1, 92) = 10.069, p = 0.002 < 0.05, partial η² = 0.099). Moreover, the partial η² results for both ANOVA analyses indicate a medium effect size. To examine the within-subject factor results further, paired-sample T-Tests were performed.
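Partial η² can be recovered from an F statistic and its degrees of freedom with the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it; the function name is illustrative, and the check is offered as a convenience rather than as part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Between-subjects factor, number of mistakes: F(1, 92) = 1.302
mistakes_between = partial_eta_squared(1.302, 1, 92)
# Between-subjects factor, knowledge assessment: F(1, 92) = 10.069
knowledge_between = partial_eta_squared(10.069, 1, 92)
```

Rounded to three decimals, these two calls reproduce the between-subjects values reported above (0.014 and 0.099). The same function can be applied to the within-subject F values as a consistency check against the SPSS output.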

Based on these analyses, Dr. Castronovo failed to reject the null hypothesis that “the order with which the representations modes (i.e. VR and 2D drawings) are implemented will not have an impact on the students’ ability to identify mistakes” when looking at the number of mistakes identified by the students. This result indicates that the order in which the learning medium is implemented (the between-subjects factor) does not affect the students’ ability to identify mistakes. However, the within-subject factor does show a difference: the number of mistakes identified differs between the two learning media.

However, Dr. Castronovo can reject the null hypothesis that “the order with which the representations modes (i.e. VR and 2D drawings) are implemented will not have an impact on the students’ knowledge of the design review process” when looking at the students’ knowledge assessment instrument scores. This result indicates that the order in which the learning medium is implemented does have an impact on the students’ knowledge of the design review process (i.e. the remembering and understanding cognitive domains). Additionally, there is a difference in the students’ knowledge of the design review process when using one learning medium versus the other.

Figure - Mean Knowledge Assessment Scores Results

Dr. Castronovo further analyzed the group differences by performing an additional independent T-Test analysis. In this analysis, Dr. Castronovo wanted to test whether there was any significant difference between students’ final scores in the knowledge assessment after both treatment 1 and treatment 2. The independent-samples T-Test showed no significant difference in the groups’ final scores, t (92) = 1.435, p = 0.155 > 0.05 at the 95% confidence level, with a Cohen’s d = 0.310. Cohen’s d indicates a medium effect size. The lack of a significant difference in the final results indicates that at the end of the experiment, students achieved the same level of knowledge regardless of the order of implementation.

Dr. Castronovo also wanted to investigate whether there were any differences in the knowledge assessment scores after the students played the VR game, Group 1 VR scores versus Group 2 VR scores. The independent-samples T-Test showed no significant difference in the groups’ scores, t (92) = 1.5649, p = 0.121 > 0.05 at the 95% confidence level, with a Cohen’s d = 0.335. Cohen’s d indicates a medium effect size. The lack of a significant difference indicates that after playing the VR game, the students achieved the same level of knowledge regardless of the order of implementation.

Lastly, Dr. Castronovo wanted to analyze whether there were any differences in the knowledge assessment scores after the students performed the 2D drawings reading activity, Group 1 Drawings scores versus Group 2 Drawings scores. The independent-samples T-Test showed a significant difference in the groups’ scores, t (92) = 3.782, p = 0.0003 < 0.05 at the 95% confidence level, with a Cohen’s d = 0.832. Cohen’s d indicates a large effect size. This significant difference indicates that, after the drawings activity, the students who played the VR game first achieved a higher assessment score than the students who played the VR game second.
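The group comparisons above follow the usual independent-samples pattern, sketched below with a pooled standard deviation. The function name and the sample scores are hypothetical, and the source does not state which Cohen's d variant SPSS or the author used, so the pooled-SD form here is an assumption.

```python
import math
from statistics import mean, variance


def independent_t(x, y):
    """Return (t, degrees of freedom, Cohen's d) for two independent groups.

    Assumes equal variances, so both statistics use the pooled SD.
    """
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / df)
    diff = mean(x) - mean(y)
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    d = diff / pooled_sd   # standardized mean difference
    return t, df, d


# Hypothetical quiz scores out of ten, for illustration only.
t_stat, df, d = independent_t([8, 9, 10], [5, 6, 7])
```

By Cohen's widely cited benchmarks, d values near 0.2, 0.5, and 0.8 are read as small, medium, and large effects respectively.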

8.1.4.2. MEETING LEARNING OBJECTIVES ANALYSIS

In addition to evaluating the effect of the implementation order on student learning, Dr. Castronovo was interested in testing whether the students met the learning objectives set for the activity in the tables. Hypothesis 3, which stated that “students using the VR learning activity (DRS) will be able to recognize the same number of design mistakes as students using 2D drawings”, was tested first. To test this hypothesis, a paired-samples t-test was performed on the number of mistakes found by Group 1. For this analysis, the assumption of normally distributed difference scores was examined and satisfied. The results showed a significant difference in the average number of mistakes identified by Group 1 students when going from 2D drawings to VR, t(36) = 7.3243, p = 0.0001 < 0.05 at the 95% confidence level, with a Cohen’s d = 1.73.

Dr. Castronovo then compared the means of the knowledge results for the students in Group 1. A paired-samples t-test was performed to test hypothesis 4, which stated that “students using the VR learning activity (DRS) will gain the same knowledge of the design review process, such as remembering and understanding, as students using 2D drawings”. For this analysis, the assumption of normally distributed difference scores was examined and satisfied. The analysis shows a significant growth in the students’ average score on the knowledge assessment instrument when going from 2D drawings to VR, t(36) = 2.4593, p = 0.01 < 0.05 at the 95% confidence level, with a Cohen’s d = 0.56, which indicates a medium effect.
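The within-group comparisons for Group 1 can be sketched in the same way with a paired-samples t-test on each student’s difference score. The effect size below is the difference-score variant of Cohen’s d (often written d_z); this is an assumption, since the report does not state which formula produced its d values, and other paired-design variants give different numbers. The function name `paired_t_and_dz` and the data are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t_and_dz(first_scores, second_scores):
    """Return (t, df, d_z) for a paired-samples t-test.

    d_z is the mean difference divided by the standard deviation of
    the differences (an assumed convention for the effect size).
    """
    if len(first_scores) != len(second_scores):
        raise ValueError("paired samples must have the same length")
    # One difference score per student: second condition minus first.
    diffs = [b - a for a, b in zip(first_scores, second_scores)]
    n = len(diffs)
    sd_diff = stdev(diffs)
    t = mean(diffs) / (sd_diff / math.sqrt(n))
    d_z = mean(diffs) / sd_diff
    return t, n - 1, d_z


# Hypothetical mistakes found per student, NOT the study's data.
drawings_mistakes = [3, 4, 2, 5, 3]
vr_mistakes = [5, 6, 5, 6, 4]

t_paired, df_paired, d_z = paired_t_and_dz(drawings_mistakes, vr_mistakes)
print(f"t({df_paired}) = {t_paired:.3f}, d_z = {d_z:.3f}")
```

With n paired students the test has n - 1 degrees of freedom, which is why a reported t(36) corresponds to 37 students in the group.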

9. Student Feedback

9.1 Quantitative Student Evaluations.

Because of the course redesign, the course evaluations have also been excellent, with an average score of 3.58 out of 4 (89.5%) and a response rate higher than 90% for every class (33 out of 34 students completed the course evaluation). Additionally, for two indicators that Dr. Castronovo found to be important, the course received an average score of 3.5 out of 4 (87.5%) for “I was engaged in learning as a result of the teaching methods used” and an aggregate average score of 3.64 out of 4 (91%) for “This course was a valuable learning experience for me”.

9.2 Qualitative Student Evaluations.

Based on your experiences in this course, what aspects of the course contributed to your learning?

- The interactive activities, such as VR as well as the many presentations we did. Also, writing our own notes and the "teaching ourselves" method really helped a lot.

- The Instructor is very good at making the lectures interesting while engaging the class with class work.

- Anyone can read codes and learn them but the hard part for me was the organization of international, state, and local codes. This class and final project helped me understand how to navigate between each of them and find out what's been amended.

- I think that the portion of the notes where we filled in the blanks was really helpful to retaining the information.

- The lecture notes Dr. C hands out, the pace of the class, in class assignments

9.3. Challenges my Students Encountered

9.3.1. Qualitative Student Evaluations.

Based on your experiences in this course, what aspects of the course contributed to your learning?

- Filling in the blanks during presentations for the first couple of weeks made it difficult to retain much of the presentation at the time because you're just waiting for one word instead of listening to the lecture. The notes that resulted from it were very helpful though. I would just suggest giving the lecture and having students take notes while following along.

- Let people do the VR individually if possible. I could've nailed that #1 score if I hadn't had a boat anchor with me.

- I felt that the presentations were not quite fair per team. Some teams had harder material than others.

10. Lessons Learned & Redesign Tips

10.1. Teaching Tips

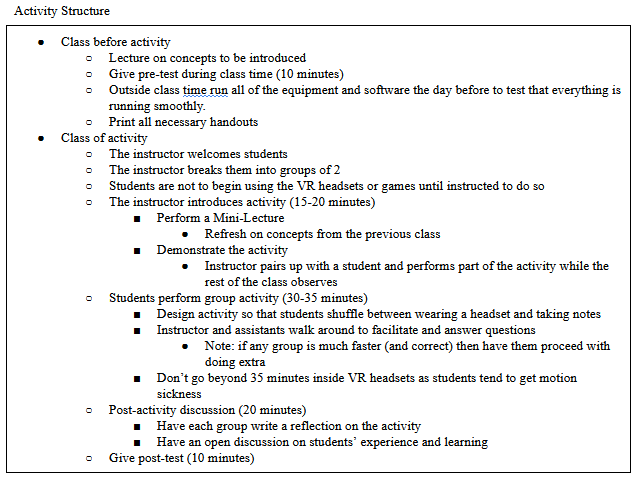

In the Fall of 2019, Dr. Castronovo successfully implemented the DRS game in the course. The VR experience was chosen for its alignment with the course modules’ topics and learning objectives. Dr. Castronovo developed learning, teaching, and assessment material to integrate the activities into the course. Based on the implementation, Dr. Castronovo identified several lessons learned for future implementations in the form of a time structure for the activity, illustrated in the following table. Before the activity can be implemented, it is essential for the instructor to introduce the necessary concepts to the students. For example, for the lecture topic on design review, the students are lectured on the concepts of design evaluation, review, and analysis. Before getting the students started, the instructor needs to check that the software and hardware work on all of the computers. Additionally, the instructor must have printed all of the instructional handouts that the students need to complete the activity. For the design review topic, the class played the DRS, which challenged the students to evaluate the design of a house. Before students play the DRS, the instructor must remind them of previously presented concepts. This is necessary to activate the students’ memory and prior knowledge.

The next step is for the instructor to break the class into groups of two, as indicated by the instructional material, and to allow students to work in a group problem-based environment. Dr. Castronovo found that engaging the students in paired activities allowed them to collaborate and supported them in taking notes and completing the instructional material. Before the students get started, the instructor should perform some “role-play” with the students to demonstrate how to perform the activity. For example, with the DRS, the instructor can pair with a student, wear a headset, play the game for a few minutes, and instruct their teammate to write on the activity handout. During the role-play, the instructor invites the rest of the class to watch the pair so that they understand the logistics of the activity. After the role-play, the instructor lets the students get started with their activity. During the activity, the instructor and, if available, their assistant must walk around continuously to ensure that any questions from the students are answered. For the VR activities, Dr. Castronovo found that limiting the gameplay to 35 minutes helped keep the students from suffering from motion sickness.

At the end of the activity, the students must reflect on what they did. Dr. Castronovo found that the reflection can be structured by first getting the students to write on their handouts and then having an open discussion. If the instructor wants to collect research data, it is suggested that the pre-test be given in the class period before the activity and that the post-test be administered right after the activity has ended. While these lessons learned were collected from a single implementation, Dr. Castronovo believes that they are of great value not only for their own future applications but also for other instructors who are interested in using VR in their classes.

10.2. Course Redesign Obstacles

Due to this successful implementation, the next step in the research will be to evaluate the value of this curricular design. In the future, Dr. Castronovo will begin to evaluate the impact of the inclusion of this technology on students’ learning as it relates to their self-efficacy, motivation, degree of engagement, and sense of belonging. The evaluation plan will entail a series of pre-test and post-test experiments. All students will receive pre-test material based on the dependent measures, which include self-efficacy, motivation, degree of engagement, and sense of belonging. These measures have already been constructed and validated and will provide a baseline. Dr. Castronovo aims to use the surveys developed by the Lawrence Hall of Science (LHS) Activation Lab.

While VR has been evaluated through case-by-case research methodology, further research needs to be conducted to analyze its impact on an engineering curriculum-wide implementation. In particular, there is research potential to evaluate the impact that VR has on closing the achievement gap and transforming the culture of learning in engineering programs. Previous research on a curriculum-wide level has been performed in other fields such as medicine, but not in engineering education. Therefore, Dr. Castronovo wants to address this issue by implementing technologies such as VR in an engineering curriculum. With this research opportunity, Dr. Castronovo has decided to spearhead the implementation of VR in the entire engineering curriculum at his institution.

10.3. Sustainability

The growing commercialization of VR technology has decreased its cost, leading to easier deployment in the classroom environment. Since the wireless Oculus Quest VR headset has become significantly more affordable and does not need to be attached to a VR-ready desktop, instructors can perform large-scale VR activities with a lower budget. The return on the upfront investment in purchasing a set of VR headsets is high, given the expected frequency of use and an average lifetime of four to five years. With this reduction in cost, other institutions can easily deploy VR activities in their classrooms, making the development of VR laboratories easily scalable and replicable.

11. Instructor Reflection

11.1. DISCUSSION OF RESULTS

The presented redesign aimed at evaluating the impact of flipping the course and implementing an educational virtual reality game for reviewing design in a classroom environment. The student lectures and flipped course activities have been shown to be valuable and impactful. In particular, Dr. Castronovo wanted to evaluate the impact of the DRS on the students’ ability to identify design mistakes during the process of design review for a sample building. Furthermore, the goal of this study was to assess the DRS game’s ability to support student learning and the development of design review skills. Lastly, Dr. Castronovo wanted to evaluate the implementation procedure that would maximize the students’ learning. To address these goals, Dr. Castronovo set forward a series of research questions and tested several hypotheses.

1) What implementation procedure order yields the highest educational impact on students’ ability to identify mistakes? For the first question, no statistically significant difference in the groups’ means was found, so the null hypothesis that the order in which the treatments are implemented makes no difference could not be rejected. Thus, regardless of whether the students were introduced to the DRS or 2D drawings first, the order did not yield a difference in their ability to identify mistakes. While this result could lead one to conclude that the order of implementation does not have an impact, the analysis of the students’ knowledge brings a different outcome.

2) What implementation procedure order yields the highest educational impact on students’ knowledge of the design review process? Unlike the results from the first research question, the analysis for the second question found a statistically significant difference in the groups’ means on the knowledge assessment. This result rejects the null hypothesis that the order did not have an impact on the students’ knowledge of the design review process. Therefore, the analysis illustrates that the order in which the DRS is implemented has an impact on the students’ knowledge and design review-related cognitive domains. A significant difference in the students’ knowledge was found when they were introduced to the 2D drawings first, followed by the DRS. At the same time, the results illustrate that the students were able to transfer their skills onto the drawings when they played the DRS game first. The implication for instructors implementing the DRS game is that they could use it in the classroom before they use 2D drawings. In fact, playing the DRS first would not only allow students to achieve the highest knowledge assessment score regarding the design review process, but it would also support them in transferring such knowledge when evaluating 2D drawings.

3) Does playing the DRS simulation game lead construction students to identify a higher number of design mistakes than evaluating the design on drawings? The results revealed that the students found a significantly higher number of mistakes when playing the DRS game compared to using 2D drawings. At the same time, after playing the DRS game, students found a significantly lower number of mistakes when evaluating drawings. This is possibly due to the 2D drawings’ inherent lack of a third dimension for evaluation and the additional effort the students needed to put forth when referencing elevation and section drawings of the building. The implication of the findings is that the first-person experience and full immersion acted as valuable spatial and perceptual cues that enabled the students to find a higher number of mistakes.

4) Does playing the DRS simulation game lead construction students to gain a higher knowledge of the design review process and related cognitive domains, such as remembering and understanding, than evaluating the design on drawings? For the fourth question, the data analysis shows a significant difference in the knowledge scores of Group 1 students, who evaluated 2D drawings first and significantly improved their scores when they then used the DRS. This illustrates that the DRS can support students in getting a higher score on knowledge assessments than when evaluating 2D drawings. Dr. Castronovo further investigated whether the students’ final knowledge scores differed between the groups. In particular, Dr. Castronovo tested whether the final score of Group 1, after playing the DRS, was different from the final score of Group 2, who performed the drawings evaluation last. The final scores were not significantly different. This result supports the second research question’s finding on which order of implementation is best suited for the classroom. In particular, it illustrates that the students from Group 2 not only transferred their knowledge from the DRS to the drawings, but their score was also not significantly different from the Group 1 students’ highest score.

11.2. CONCLUSION

The growing implementation of active and experiential learning methods in construction engineering education has led to the adoption of innovative technology in the classroom. One particular technology that is under scrutiny by several disciplines is VR. Meanwhile, VR has demonstrated its value in performing design reviews in the industry. However, the benefits of VR still have to be investigated in construction pedagogy. To address this research potential, Dr. Castronovo wanted to investigate the impact of an educational virtual reality game on learners’ design review knowledge and skills. Based on the study presented, Dr. Castronovo was able to test the effects of an educational VR game, the Design Review Simulator, on students’ achievement of a set of learning objectives, such as identifying design mistakes and matching them to the correct mistake type. Additionally, Dr. Castronovo evaluated the optimal implementation procedure to achieve the highest educational impact, also in terms of design review-related knowledge assessment.

The research results contribute to the growing knowledge base on the implementation of VR in the classroom. In particular, the research illustrated that the benefits of VR found in the construction industry, for example in terms of improved communication, user involvement, and feedback collection, could be translated into the classroom environment. Based on the results, Dr. Castronovo found that the DRS significantly supported the students in improving their skills in identifying mistakes and increased their knowledge of the design review process. Additionally, the analysis showed that the DRS could be implemented in an integrated manner with traditional representations; for example, if students use the DRS before they are exposed to construction drawings, they will retain and transfer their knowledge when evaluating paper-based documentation such as 2D drawings. These findings are in line with other research in construction education, which has found that construction educational games supported students in achieving learning objectives and transferring them into other media.

On the other hand, these findings do not advocate for teaching design review skills solely through VR. In fact, the use of 2D drawings before VR could fit with the theory of Productive Failure. Kapur and Bielaczyc argued that instructors should introduce students to complex problems even if the students are not able to succeed in solving them, because the attempt prepares them to solve other problems. Therefore, if an instructor wanted students to first use 2D drawings, the drawings could serve as a primer or scaffold for the VR experience.

In addition to the scientific contributions, Dr. Castronovo also developed a free-to-use, open-source VR game and educational implementation material that future researchers and instructors can use in learning activities and for further research on this topic.

Furthermore, the current study presents a large sample size that supports the generalization of the results. However, additional research must be performed to further investigate the value of VR games in construction education. In future research, differences between media could be investigated; for example, comparing other immersive and non-immersive virtual environments would support Dr. Castronovo in investigating the value of full immersion in supporting design review learning. Additionally, Dr. Castronovo is interested in evaluating the impact of VR games in projects beyond residential housing, such as other building types or civil projects of larger scope. Lastly, Dr. Castronovo would like to challenge learners not only to identify design mistakes but also to propose solutions to the problems found, so as to evaluate the role of VR in problem-solving skills as well. Therefore, Dr. Castronovo wants to go beyond Bloom’s lower-order thinking skills, such as remembering and understanding, which are within the scope of this paper, and investigate the game’s impact on higher-order cognitive domains, such as analysis and evaluation.